Our SEO landing pages are built with a Grails application that has become harder to maintain over time. Some time ago, we decided to rebuild our platforms on a new tech stack built around a Next.js application called seo-pages. We chose to build the brochure widgets first instead of rebuilding all pages at once. This way, we could verify changes faster and react more quickly to problems in production.

We approached this project the same way as our other projects: we had three environments (development, stage, and production), and we deployed the application as a Debian package on an EC2 server. This approach caused two problems:

- Different environments can lead to different application behavior. You might be familiar with the excuse "it works on my machine." This did not inspire confidence in our code changes.

- Building a Debian package with all its dependencies takes time. Deploying the package is slow, too, because a new EC2 instance has to boot before the package can be installed. In our case, it took at least 20 to 30 minutes from starting a deployment until we saw the results in production.

Fortunately, there are promising solutions to these problems: Docker and Kubernetes. Our Ops team has built an environment intended to simplify Kubernetes deployments across the company. In this post, we describe how we deployed our seo-pages application to this environment.

Provide One Environment for Development and Production

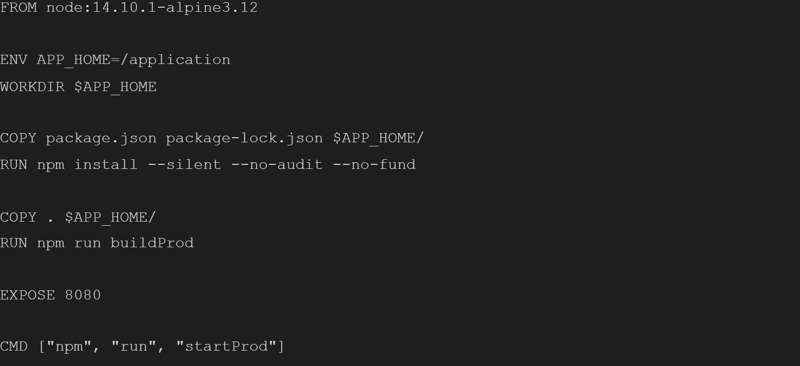

To tackle the first problem, we needed a way to provide one environment throughout the software development process. We chose Docker, which packages the application and all its dependencies into an image, similar to a Debian package. A basic Dockerfile had existed in the project since the beginning, so we only had to adapt it to our needs. We also applied some best practices to keep the image as small and the build time as short as possible.

Dockerfile of seo-pages

As the Dockerfile above shows, dependency resolution and building the application are separate steps. This lets Docker reuse cached layers: as long as the files copied in before the dependency step are unchanged, that layer does not have to be rebuilt, which reduced the image build time. The first build took relatively long, but once the base image and the application dependencies were cached, builds took under two minutes, fast enough to use Docker locally during development.
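To illustrate why separating the steps pays off, here is a toy model of Docker's layer cache in Python. It is a simplification, not Docker's implementation: each step's cache key is derived from the previous step's key, its instruction, and the contents of any files it copies, so once one key changes, every later step is rebuilt. The steps (`node:lts`, `npm ci`, `npm run build`, `pages/index.tsx`) are hypothetical stand-ins, not the actual seo-pages Dockerfile.

```python
import hashlib

# Hypothetical build steps: (instruction, files the step copies into the image).
# Dependency manifests are copied and installed before the rest of the source.
SPLIT = [
    ("FROM node:lts", []),
    ("COPY package.json package-lock.json ./", ["package.json", "package-lock.json"]),
    ("RUN npm ci", []),
    ("COPY . .", ["package.json", "package-lock.json", "pages/index.tsx"]),
    ("RUN npm run build", []),
]

# Same build, but everything is copied before the dependencies are installed.
NAIVE = [
    ("FROM node:lts", []),
    ("COPY . .", ["package.json", "package-lock.json", "pages/index.tsx"]),
    ("RUN npm ci", []),
    ("RUN npm run build", []),
]


def layer_keys(steps, files):
    """Return one cache key per step, chained on the previous step's key."""
    keys, parent = [], ""
    for instruction, paths in steps:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(instruction.encode())
        # Steps that copy files are also keyed on the copied files' contents.
        for path in sorted(paths):
            h.update(path.encode())
            h.update(files[path].encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(steps, old_files, new_files):
    """List the steps whose cache key changed and therefore must re-run."""
    old_keys = layer_keys(steps, old_files)
    new_keys = layer_keys(steps, new_files)
    return [step[0] for step, a, b in zip(steps, old_keys, new_keys) if a != b]


old = {"package.json": '{"dependencies": {}}', "package-lock.json": "lock-v1",
       "pages/index.tsx": "v1"}
new = {**old, "pages/index.tsx": "v2"}  # only application code changed

print(rebuilt_steps(SPLIT, old, new))  # ['COPY . .', 'RUN npm run build']
print(rebuilt_steps(NAIVE, old, new))  # ['COPY . .', 'RUN npm ci', 'RUN npm run build']
```

With the split layout, a code-only change skips dependency installation entirely; only a change to the manifests forces `RUN npm ci` to run again.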

Build the Deployment Pipeline

With the Docker image ready, it was time to tackle the second problem. Our Ops team had spent months building a Kubernetes environment to make life easier for application developers. As a result, we only had to add the Dockerfile to our project, write a Terraform configuration for our application, and configure the deployment with Helm values. We could configure the deployment both globally and individually for each of our platforms: Bonial, kaufDA, and MeinProspekt.
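Helm combines values files in layers: when several `-f`/`--values` files are passed, later files take precedence, and nested maps are merged key by key. The Python sketch below mimics that layering to show how global and per-platform settings combine. It is a simplification (for example, it ignores Helm's rule that setting a key to null removes it), and all keys and values are hypothetical, not our actual chart.

```python
import copy

# Hypothetical values shared by every platform (keys are illustrative only).
GLOBAL_VALUES = {
    "image": {"repository": "seo-pages", "tag": "latest"},
    "replicaCount": 2,
    "env": {"NODE_ENV": "production"},
}

# Hypothetical per-platform overrides, one values file per platform.
PLATFORM_VALUES = {
    "bonial": {"env": {"PLATFORM": "bonial"}, "replicaCount": 4},
    "kaufda": {"env": {"PLATFORM": "kaufda"}},
    "meinprospekt": {"env": {"PLATFORM": "meinprospekt"}},
}


def merge_values(base, override):
    """Deep-merge `override` into a copy of `base`.

    Nested maps are merged key by key; scalars and lists in `override`
    replace the value in `base`. Neither input is modified.
    """
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


for platform, overrides in PLATFORM_VALUES.items():
    print(platform, merge_values(GLOBAL_VALUES, overrides))
```

Keeping shared settings in one file and only the differences in per-platform files means a global change is made once and picked up by all three platforms.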

Once all code reviews were done and all changes applied, the moment of truth arrived: did the deployment actually work?

The Moment of Truth

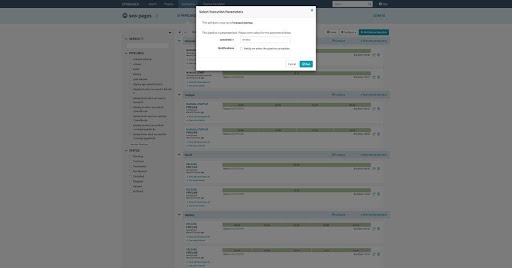

The deployment pipeline appeared in our Spinnaker instance, so we could deploy our application to the Kubernetes cluster and test it.

Deployment Pipeline of Our Application in Spinnaker

The initial deployment took a while because the base image and all dependencies had to be downloaded first. Once they were cached, the build took between one and two minutes. Overall, deploying application changes to production took less than five minutes, a massive improvement over the 20 to 30 minutes of our old deployment. Rollbacks after errors were even faster.

However, fast deployments are worthless if the application does not work. Before switching to the Kubernetes deployment, we tested it thoroughly to make sure everything worked as before. Testing mainly consisted of manually checking the endpoints. We were also interested in the performance of the Kubernetes setup, so we ran a quick and simple load test with Locust.
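The post does not include the Locust scenario itself. Locust describes simulated users as Python classes; as a dependency-free illustration of the same basic idea, the sketch below uses only the standard library (it is not Locust) to send concurrent GET requests and report failures and a rough 95th-percentile latency. The function names and default request counts are hypothetical.

```python
import http.client
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def fetch(url, timeout=5.0):
    """Send one GET request and return (succeeded, latency in seconds)."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            response.read()
        ok = True
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError (4xx/5xx) and socket timeouts are OSErrors;
        # malformed responses raise http.client.HTTPException.
        ok = False
    return ok, time.perf_counter() - start


def load_test(url, total_requests=200, concurrency=20):
    """Fire `total_requests` GETs at `url` from `concurrency` worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(fetch, [url] * total_requests))
    latencies = sorted(latency for ok, latency in results if ok)
    failed = total_requests - len(latencies)
    # Rough 95th percentile: the value 95% of the way through the sorted list.
    p95 = latencies[int(0.95 * (len(latencies) - 1))] if latencies else None
    return {"ok": len(latencies), "failed": failed, "p95_seconds": p95}
```

Called as `load_test("http://localhost:3000/", total_requests=1000, concurrency=50)` against a hypothetical local URL, it returns the number of successful and failed requests plus the latency estimate. Unlike Locust, it has no ramp-up, no web UI, and no distributed workers.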

CPU Usage per Application Instance During the Load Test

We were mainly interested in how quickly new instances boot when traffic is higher than usual. As the chart above shows, Kubernetes quickly created new application instances that could handle the load during the test. Our API, however, went down during the test because of all the requests coming from the widgets.

The last step was changing the DNS entries to point to the Kubernetes deployment. At the time of writing, this has been completed for Bonial: all the brochures and offers you see on Bonial are served from our Kubernetes deployment. Our German platforms will follow soon.
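The post does not say how scaling was configured for the load test. Assuming a HorizontalPodAutoscaler that targets average CPU utilization (an assumption; the CPU chart would fit such a setup), the Kubernetes documentation gives the core formula as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The function below reproduces only that calculation. It ignores pod readiness, missing metrics, and the scale-down stabilization window, and its min/max defaults are arbitrary.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by the HPA formula for a single metric.

    desired = ceil(current_replicas * current_utilization / target_utilization),
    skipped when the ratio is within `tolerance` of 1.0 and clamped to
    [min_replicas, max_replicas]. Defaults are illustrative assumptions.
    """
    ratio = current_utilization / target_utilization
    if abs(1.0 - ratio) <= tolerance:
        desired = current_replicas  # close enough to the target: no scaling
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical numbers: 2 pods at 90% CPU utilization, target 60%.
print(desired_replicas(2, 90, 60))  # 3
```

Because the new replica count is proportional to how far utilization overshoots the target, a sudden spike can add several pods in a single step instead of one at a time.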

Conclusion

Since we started using containers, we have had the same environment throughout the development process, which has boosted our confidence in our code changes. Kubernetes also met our expectation of short boot times during traffic spikes. We were impressed, too, by how easy it was to add a Kubernetes deployment for our application.

In our view, the current Kubernetes setup is mature enough to deploy user-facing applications. Still, our Ops team keeps finding ways to improve it, and we plan to improve our application's deployment in the coming weeks.

Have you already used Docker or Kubernetes? What are your experiences? If you have not used either, do you plan to?

Author

This article was written by Konstantin Bork, a Software Engineering Working Student at Bonial.